The AI Safety Incubation Program

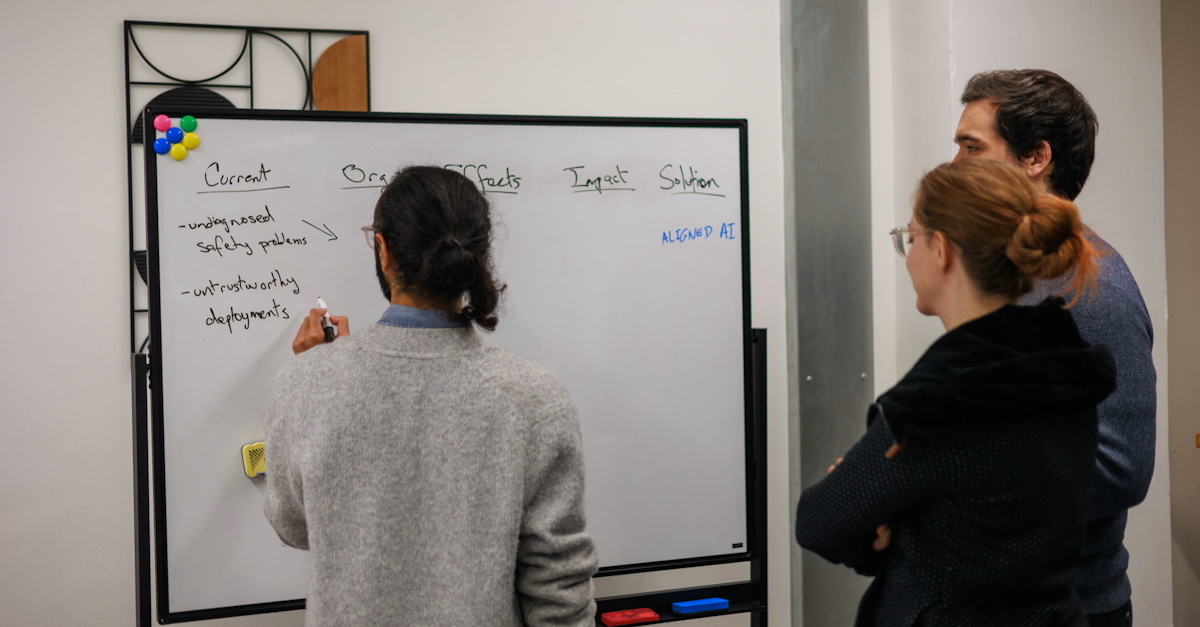

Our incubation program brings together founders, advisors, and funders so that they can advance the AI safety field together. The program is open to incubating both non-profit and for-profit organizations.

The information below describes our most recent program. We are currently not accepting applications, but we encourage you to express your interest in taking part in a future iteration of the program.


Why this program?

We are at a critical juncture in AI development. A recent survey of 2,778 AI researchers revealed that nearly half estimate at least a 10% chance of advanced AI leading to catastrophic outcomes, including human extinction [1]. Despite this, only 1-3% of AI publications focus on safety [2]. The world urgently needs more dedicated AI safety research to address these existential risks and shape the future of AI responsibly.

Our program is your starting point to make a positive impact in the AI Safety field. We facilitate:

- Finding a co-founder: Thoroughly test your co-founding fit with others who complement your skills and share your values.

- Mentors & advisors: Access support from the Catalyze Impact team and from external industry experts and experienced entrepreneurs.

- Funding opportunities: Connect with our non-profit seed funding circle and potential investors, and request a stipend to support you during the program.

- Network & community: Immerse yourself in a group of fellow AI safety founders and grow your network in the London AI safety ecosystem.

- Build up your organization: Work on the priorities of your early-stage organization, drawing on external support whenever you need it.

Whether you're starting from scratch or already have a co-founder and initial concept, our program is designed to help you move forward quickly. We focus on equipping you with the skills, funding, and network to truly make a difference in AI safety.

We are a non-profit focused on advancing the AI Safety field, and our mission is to help you succeed by incubating and accelerating your idea. Catalyze will not take a stake in any incubated organization, whether for-profit or non-profit. Your organization will be entirely under your control.

You will receive tailored support from the Catalyze Impact team and from external experts, such as:

Gábor Szorad

Has founded several companies and led teams of 5 to 8,200 people in managerial and C-level roles before shifting his career to focus full-time on AI safety.

Chris Akin

COO of Apollo Research, an AI evals and interpretability research organization. He has also launched and led several social impact businesses.

Program overview

This program is a pilot and consists of two phases. If you already have a co-founder and organization idea, you can join us for Phase 2 without joining Phase 1. If so, you will have the opportunity to indicate this in your application.

Phase 1: Co-founder Matching

Location: Online.

Time commitment: 12-20 hours a week for 5 weeks, between 4 November and 8 December.

During the online Phase 1, you will be part of a cohort of around 15 individuals committed to improving AI Safety, which will help you expand your network of AI Safety professionals.

Participants will:

- Define their ideal co-founder profile.

- Collaborate in rotating pairs on a variety of useful projects to test co-founder fit with the other participants.

- Further develop their research organization proposals.

Participants have a strong say in who they test their fit with and will decide themselves who they want to co-found with.

Towards the end of Phase 1 you will evaluate whether you want to commit to moving to Phase 2 with the co-founder you found, and we will assess whether you and your co-founder are a good fit for Phase 2. Advancing to Phase 2 is primarily based on forming a promising founding team during Phase 1. While having a well-developed organization concept is beneficial, we value teams that are open to refining or pivoting their ideas.

After Phase 1, there will be a four-week break during which you will prepare for Phase 2 by further developing your organization plan with your co-founder and arranging to join us full-time, in person, in London.

Phase 2: In-Residence Organization Building

Location: London.

Time commitment: full-time for 4 weeks, between 6 January and 2 February. For exceptional candidates, we may allow part-time participation instead.

You and your co-founder(s) will work together in-person to focus on the very early stages of building your organization. While you focus on taking the next steps in building your organization, preparing to fundraise, and further stress-testing your co-founding fit, we provide various forms of support, such as:

- Bookable office hours with a network of experienced mentors and advisors.

- A stipend, available on request, for up to two months to enable you to work on your organization full-time.

- A community of fellow AI Safety research organization founders and organized networking opportunities in the wider London AI Safety ecosystem.

- Tailored support from the Catalyze Impact team, such as resources, connections, and coaching.

- Access to a non-profit seed funding circle that you can apply to at the end of the program and introductions to potential investors.

Post program

After the program, we will provide continued individualized support for our graduates and continued access to the Catalyze community.

Who we are looking for

1. You are highly committed

You are highly committed, a self-starter, and have a lot of grit. Starting an organization is difficult, but it can have an incredible impact.

2. You are motivated to contribute to AI Safety

You aspire to create meaningful, positive impact in the field and believe that it is a priority to prevent severely negative outcomes from AI.

3. You have a scout mindset

You are open to alternative views, inclined to explore new information and arguments, and able to weigh them critically and change course when new information warrants it.

4. You have a preliminary plan or are willing to collaborate

You either have a preliminary plan or agenda for an AI Safety organization, or are willing to collaborate with someone who does. You will be able to develop this further during the program.

Application process

The application process consists of four steps. The process is designed to help you figure out if you would be a good fit for starting a new organization, so do not hesitate to apply if you are unsure.

- Written application: Questionnaire and CV upload (30-45 minutes).

- Test task: Demonstrate your skills and thinking (~3-4 hours).

- Interview: In-depth discussion about your background and goals (~45 minutes).

- Reference checks: We will contact up to two of your references.

We are no longer accepting applications for this round of the program. If you are interested in taking part in a future iteration, please express your interest below or sign up for our newsletter.

FAQ

While we assume a basic understanding of AI Safety, equivalent to completing an introductory course such as BlueDot’s AI Safety Fundamentals, we encourage you to apply if you are passionate and willing to learn quickly. You can access AI Safety Fundamentals course materials and work through them in your own time. Additionally, you can find more resources to get started with AI Safety here and here.

Please check the “Who Are We Looking For” section for more details.

While having a preliminary plan or agenda for an AI Safety research organization is beneficial, it is not required. We value candidates who are either prepared with initial ideas or willing to collaborate with others who have them.

The program is designed to help you develop and refine organizational concepts throughout. If you do not have an organization idea at the start, we will initially match you with other candidates who do. We will also share organization ideas during the program.

If you need some initial inspiration, you can look through lists of ideas and risks online.

Yes, you can apply together with your co-founder. Our program is flexible and accommodates both individual applicants and pre-formed teams. If you already have a co-founder and a relatively developed organization idea, you have the option to skip Phase 1 and join directly for Phase 2. When you fill out the application form, you will have the opportunity to indicate that you are applying as a team. However, please note that each team member should submit an individual application to ensure we have complete information on all participants.

In this program, we primarily focus on incubating technical research organizations in the AI safety field, but we are open to approaches that combine technical work with other crucial aspects of AI Safety. We are particularly looking to support:

- Pure research organizations: We incubate teams dedicated to advancing fundamental AI safety research, exploring novel approaches and tackling complex theoretical challenges.

- Product-focused research organizations: We also support teams developing practical tools and technologies that can contribute directly to improving AI safety, such as AI auditing and evaluation tools and other products that can support the governance of AI.

- Neglected research areas: We are excited about supporting ventures that address underexplored or neglected areas within AI safety.

Even if you do not find a co-founder or organization idea in Phase 1, you will still benefit from skill-building and networking with other ambitious people looking to make an impact in AI Safety, and you will become part of our network, which may lead to future opportunities. The matching phase also takes place part-time and online, so you can participate alongside your current job or studies.

On the one hand, a benefit of joining this pilot program is that there is a lot of room for individual support, and we can flexibly adapt to your needs by tailoring large parts of the program to you. On the other hand, we cannot offer as much certainty about precise outcomes (e.g. the odds of receiving seed funding from our seed funding circle) as programs that have run more often. We mitigate this uncertainty wherever possible, for example by involving likely future funders of incubatees' organizations in assessing your application, which increases the odds that incubatees quickly secure seed funding.

No. As a charitable organization, we're focused on impact, not profit. Our program is open to incubating for-profit companies as well because we believe the scale and resources that for-profit organizations can acquire can be very beneficial in making an impact.

In our program you will retain autonomy and time to work on your personal priorities. The main support we offer is structured co-founder matching, personalized mentorship from industry experts, and access to seed funding and investor networks that would be challenging to replicate independently.

You can request a stipend of around $2,200 per month for up to two months to enable full-time work on your organization during Phase 2, as well as support to cover flights and other costs of joining us in London.

Please email us at info@catalyze-impact.org for any additional questions.

Applicants must be 18 or older by 4 November 2024. We welcome applications from both UK and non-UK citizens, and from all backgrounds. Please see the “Who Are We Looking For” section for more detail.